Han Wu 吴瀚

I am a Ph.D. student at the School of Biomedical Engineering, ShanghaiTech University, advised by Prof. Dinggang Shen. I obtained my B.Eng. with honors from Wuhan University of Technology (WUT) in 2022. During the initial stage of my Ph.D. (Sept. 2022 - June 2024), I focused on digital dentistry, working closely with Prof. Zhiming Cui. Since 2024, my research interest has shifted toward cardiovascular disease diagnosis and treatment. Currently, I am also a research intern at United Imaging Intelligence (UII), working with Dr. Dijia Wu.

My research aims to develop data-driven, computer-aided diagnosis and treatment systems for healthcare applications, with a particular emphasis on cardiovascular and dental health. My work primarily focuses on two technical areas:

Label-Efficient Learning in Medical Images: Developing high-performance models for real-world clinical scenarios that rely on weak or partial annotations.

VFM/VLM for Medical Image Analysis: Building foundation models for medical image analysis, including Vision Foundation Models (VFM) for medical image interpretation (e.g., segmentation and landmark detection) and Vision-Language Models (VLM) for image understanding and reasoning.

Education

ShanghaiTech University, Shanghai, China

Ph.D. in Computer Science

Sept. 2022 - Present

Supervisor: Prof. Dinggang Shen

Wuhan University of Technology, Wuhan, China

B.E. in Information Engineering

Sept. 2018 - June 2022

Outstanding Graduates (Postgraduate-Recommendation)

Experience

United Imaging Intelligence, Shanghai, China

Research Intern @ R&D

June 2025 - Present

Working with Dr. Dijia Wu.

News

One paper is accepted by IEEE TMI, a nice ending for 2025!

One paper is accepted by IEEE TMI!

One paper is accepted by MedIA!

One paper is accepted by MICCAI 2025 after rebuttal!

Start my research internship @ UII, PASSION for CHANGE!

One clinical paper is accepted by Journal of Dentistry!

One paper is early accepted by MICCAI 2025!

My Google Scholar citations have reached 100! 🎈🎈🎈

One paper is accepted by MedIA!

One paper is accepted by IEEE TMI!

One paper is accepted by MICCAI 2024!

Pass PQE and officially become a Ph.D. candidate!

Our survey paper on CLIP in medical imaging is now available online!

One paper is accepted by MICCAI 2023!

Start a new journey at ShanghaiTech!

Graduate from WUT with "Outstanding Graduates" honor!

Graduation thesis is selected as the "Outstanding Graduation Thesis"!

Receive the offer to BME during the 2021 ShanghaiTech BME School Summer Camp!

Publications

* indicates equal contribution and + indicates corresponding author.

Semi-Supervised Landmark Tracking in Echocardiography Video via Spatial-Temporal Co-Training and Perception-Aware Attention

IEEE Transactions on Medical Imaging (IEEE TMI), 2026

Han Wu, Haoyuan Chen, Lin Zhou+, Qi Xu+, Zhiming Cui+, Dinggang Shen+

[PDF]

Abstract: Precise landmark annotation in cardiac ultrasound images is fundamental for quantitative cardiac health assessment. However, the time-intensive nature of manual annotation typically constrains clinicians to annotate only selected key-frames, limiting comprehensive temporal analysis capabilities. While recent automated landmark detection methods have demonstrated success for key-frame analysis, they fail to address the clinical need for continuous temporal evaluation across entire cardiac sequences. To bridge this gap, we present SemiEchoTracker, a novel semi-supervised framework that enables comprehensive landmark tracking throughout echocardiography sequences while requiring supervision only on key-frames. Our framework introduces three key innovations: 1) a co-training mechanism that enforces mutual consistency between spatial detection and temporal tracking, enabling accurate intermediate frame detection without additional annotations, 2) a guided DINOv2 pretraining strategy tailored for extracting fine-grained echocardiography-specific spatial features, and 3) a perception-aware spatial-temporal (PAST) attention module that efficiently captures inter- and intra-frame relationships in echocardiography videos. To the best of our knowledge, this work presents the first end-to-end approach for comprehensive landmark tracking in echocardiography sequences using only key-frame supervision. Extensive validation across multiple cardiac views demonstrates that our method achieves state-of-the-art performance in both detection and tracking tasks.

Dual Cross-image Semantic Consistency with Self-aware Pseudo Labeling for Semi-supervised Medical Image Segmentation

IEEE Transactions on Medical Imaging (IEEE TMI), 2025

Han Wu, Chen Wang, Zhiming Cui+

[PDF] | [CODE]

Abstract: Semi-supervised learning has proven highly effective in tackling the challenge of limited labeled training data in medical image segmentation. In general, current approaches, which rely on intra-image pixel-wise consistency training via pseudo-labeling, overlook the consistency at more comprehensive semantic levels (e.g., object region) and suffer from severe discrepancy of extracted features resulting from an imbalanced number of labeled and unlabeled data. To overcome these limitations, we present a new Dual Cross-image Semantic Consistency (DuCiSC) learning framework, for semi-supervised medical image segmentation. Concretely, beyond enforcing pixel-wise semantic consistency, DuCiSC proposes dual paradigms to encourage region-level semantic consistency across: 1) labeled and unlabeled images; and 2) labeled and fused images, by explicitly aligning their prototypes. Relying on the dual paradigms, DuCiSC can effectively establish consistent cross-image semantics via prototype representations, thereby addressing the feature discrepancy issue. Moreover, we devise a novel self-aware confidence estimation strategy to accurately select reliable pseudo labels, allowing for exploiting the training dynamics of unlabeled data. Our DuCiSC method is extensively validated on four datasets, including two popular binary benchmarks in segmenting the left atrium and pancreas, a multi-class Automatic Cardiac Diagnosis Challenge dataset, and a challenging scenario of segmenting the inferior alveolar nerve that features complicated anatomical structures, showing superior segmentation results over previous state-of-the-art approaches.

CLIK-Diffusion: Clinical Knowledge-informed Diffusion Model for Tooth Alignment

Medical Image Analysis (MedIA), 2025

Yulong Dou, Han Wu, Changjian Li, Chen Wang, Tong Yang, Min Zhu, Dinggang Shen, Zhiming Cui+

[PDF] | [CODE]

Abstract: Orthodontics aims to rectify misaligned teeth (i.e., malocclusion), affecting both oral health and aesthetics. However, traditional methods for aligning teeth involve tedious and laborious manual procedures that heavily depend on the expertise of dentists, leading to inefficient and prolonged treatment durations. Various automatic methods have been proposed to assist the less experienced dentists. Yet, most existing approaches lack clinical insight and often reduce the problem to directly mapping dental point clouds to transformation matrices. This over-simplification fails to capture the nuanced requirements of orthodontic treatment, namely that effective alignment of misaligned teeth must adhere to specific clinical rules (e.g., Andrews' Six Keys & Six Elements). To bridge this gap, we propose CLIK-Diffusion, a CLInical Knowledge-informed Diffusion model for automatic tooth alignment. CLIK-Diffusion formulates the complex problem of tooth alignment as a more manageable landmark transformation problem, which is further refined into a landmark coordinate generation task. Specifically, we first detect landmarks for each tooth based on its specific category and then construct our CLIK-Diffusion to learn the distribution of normal occlusion. To encourage the integration of essential clinical knowledge, CLIK-Diffusion proposes hierarchical constraints from three paradigms: 1) dental-arch level: to constrain the arrangement of teeth from a global perspective, 2) inter-tooth level: to ensure tight contacts and avoid unnecessary collisions between neighboring teeth, and 3) individual-tooth level: to guarantee the correct orientation of each tooth. Ultimately, CLIK-Diffusion predicts the post-orthodontic landmarks that align with clinical knowledge. Rigid transformations are computed based on the coordinates of pre- and post-orthodontic landmarks and then applied to each tooth. We evaluate our CLIK-Diffusion on various malocclusion cases collected in real-world clinics, and demonstrate its exceptional performance and strong applicability in orthodontic treatment, compared with other state-of-the-art methods.

Fully Automated Evaluation of Condylar Remodeling After Orthognathic Surgery in Skeletal Class II Patients Using Deep Learning and Landmarks

Journal of Dentistry (JoD), 2025

Wei Jia, Han Wu, Lanzhuju Mei, Jiamin Wu, Minjiao Wang+, Zhiming Cui+

[PDF] | [CODE]

Abstract: Condylar remodeling is a key prognostic indicator in maxillofacial surgery for skeletal class II patients. In this study, we present a pioneering approach for fully automated evaluation of postsurgical condylar remodeling in skeletal class II patients. By integrating landmark-guided segmentation and employing the ramus as a stable reference for robust registration, the proposed method demonstrates superior accuracy while improving efficiency by approximately 150 times compared to the manual method. Beyond providing qualitative and quantitative assessments, the resulting patient-specific 3D condylar models enable longitudinal monitoring of postoperative changes, support the early detection of temporomandibular joint (TMJ) dysfunction, and facilitate data-driven personalized management strategies.

A Semi-Supervised Knowledge Distillation Framework for Left Ventricle Segmentation and Landmark Detection in Echocardiograms

MICCAI 2025

Haoyuan Chen, Yonghao Li, Long Yang, Han Wu, Lin Zhou, Kaicong Sun, Dinggang Shen+

[PDF]

Abstract: Left ventricular segmentation and landmark detection from echocardiograms are routine practices in clinical settings for comprehensive cardiovascular disease evaluation. Recently, deep learning-based models have been developed to interpret echocardiograms. However, existing methods face challenges in handling sparse annotations, limiting their clinical applicability. Additionally, their robustness can be significantly influenced by temporal inconsistency (i.e., abrupt prediction fluctuations between consecutive frames) and inter-task conflict (i.e., detected landmarks deviating from segmentation boundaries). To address these issues, we propose a novel semi-supervised framework that integrates: 1) a knowledge distillation method for generating pseudo labels of the numerous unlabeled frames to improve the performance; 2) a Task-aware Spatial-Temporal Network (TSTNet) along with consistency constraints that enhances robustness by enforcing temporal consistency across frames, and inter-task consistency between segmentation and landmark detection. Experimental results on two datasets (a public dataset with 1,000 subjects and a private dataset with 1,950 subjects) show that the proposed framework significantly outperforms the previous approaches. The source code and dataset will be publicly released upon paper acceptance.

Adapting Foundation Model for Dental Caries Detection with Dual-View Co-Training

MICCAI 2025 [Early Accepted (Top 9%)]

Tao Luo*, Han Wu*, Tong Yang, Dinggang Shen, Zhiming Cui+

[PDF] | [CODE]

Abstract: Accurate dental caries detection from panoramic X-rays plays a pivotal role in preventing lesion progression. However, current detection methods often yield suboptimal accuracy due to subtle contrast variations and diverse lesion morphology of dental caries. In this work, inspired by the clinical workflow where dentists systematically combine whole-image screening with detailed tooth-level inspection, we present DVCTNet, a novel Dual-View Co-Training network for accurate dental caries detection. Our DVCTNet starts with employing automated tooth detection to establish complementary global and local views, which are panoramic X-ray images and the cropped tooth images, and pretrained two vision foundation models on the two views respectively. The global-view foundation model serves as the detection backbone, generating region proposals and global features, while the local-view model extracts detailed features from corresponding cropped tooth patches matched by the region proposals. To effectively integrate information from both views, we introduce a Gated Cross-View Attention (GCV-Atten) module that dynamically fuses dual-view features, enhancing the detection pipeline by integrating the fused features back into the detection model for final caries detection. To rigorously evaluate our DVCTNet, we test it on a public dataset and further validate its performance on a newly curated, high-precision dental caries detection dataset, annotated using both intra-oral images and panoramic X-rays for double verification. Experimental results demonstrate DVCTNet's superior performance against existing state-of-the-art (SOTA) methods on both datasets, indicating the clinical applicability of our method.

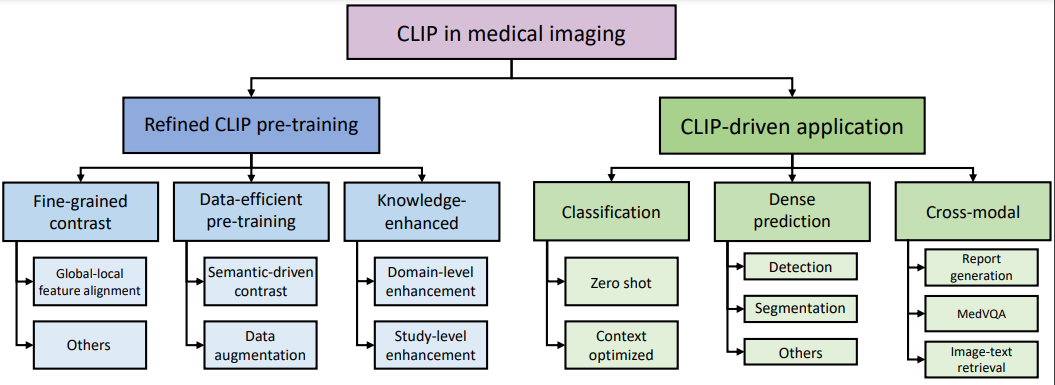

CLIP in Medical Imaging: A Comprehensive Survey

Medical Image Analysis (MedIA), 2025

Zihao Zhao*, Yuxiao Liu*, Han Wu*, Mei Wang, Yonghao Li, Sheng Wang, Lin Teng, Disheng Liu, Zhiming Cui+, Qian Wang+, Dinggang Shen+

[PDF] | [CODE]

Abstract: Contrastive Language-Image Pre-training (CLIP), a simple yet effective pre-training paradigm, successfully introduces text supervision to vision models. It has shown promising results across various tasks due to its generalizability and interpretability. The use of CLIP has recently gained increasing interest in the medical imaging domain, serving as a pre-training paradigm for image-text alignment, or a critical component in diverse clinical tasks. With the aim of facilitating a deeper understanding of this promising direction, this survey offers an in-depth exploration of the CLIP within the domain of medical imaging, regarding both refined CLIP pre-training and CLIP-driven applications. In this paper, (1) we first start with a brief introduction to the fundamentals of CLIP methodology; (2) then we investigate the adaptation of CLIP pre-training in the medical imaging domain, focusing on how to optimize CLIP given characteristics of medical images and reports; (3) furthermore, we explore practical utilization of CLIP pre-trained models in various tasks, including classification, dense prediction, and cross-modal tasks; (4) finally, we discuss existing limitations of CLIP in the context of medical imaging, and propose forward-looking directions to address the demands of medical imaging domain. Studies featuring technical and practical value are both investigated. We expect this comprehensive survey will provide researchers with a holistic understanding of the CLIP paradigm and its potential implications.

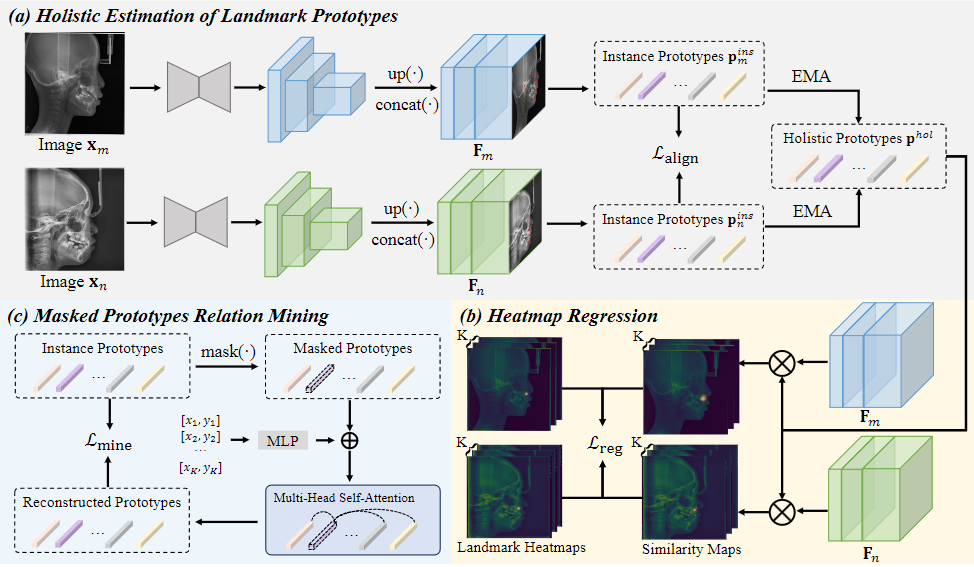

Cephalometric Landmark Detection across Ages with Prototypical Network

MICCAI 2024

Han Wu*, Chong Wang*, Lanzhuju Mei, Tong Yang, Min Zhu, Dinggang Shen, Zhiming Cui+

[PDF] | [CODE] | [POSTER]

Abstract: Automated cephalometric landmark detection is crucial in real-world orthodontic diagnosis. Current studies mainly focus on only adult subjects, neglecting the clinically crucial scenario presented by adolescents whose landmarks often exhibit significantly different appearances compared to adults. Hence, an open question arises about how to develop a unified and effective detection algorithm across various age groups, including adolescents and adults. In this paper, we propose CeLDA, the first work for Cephalometric Landmark Detection across Ages. Our method leverages a prototypical network for landmark detection by comparing image features with landmark prototypes. To tackle the appearance discrepancy of landmarks between age groups, we design new strategies for CeLDA to improve prototype alignment and obtain a holistic estimation of landmark prototypes from a large set of training images. Moreover, a novel prototype relation mining paradigm is introduced to exploit the anatomical relations between the landmark prototypes. Extensive experiments validate the superiority of CeLDA in detecting cephalometric landmarks on both adult and adolescent subjects. To our knowledge, this is the first effort toward developing a unified solution and dataset for cephalometric landmark detection across age groups.

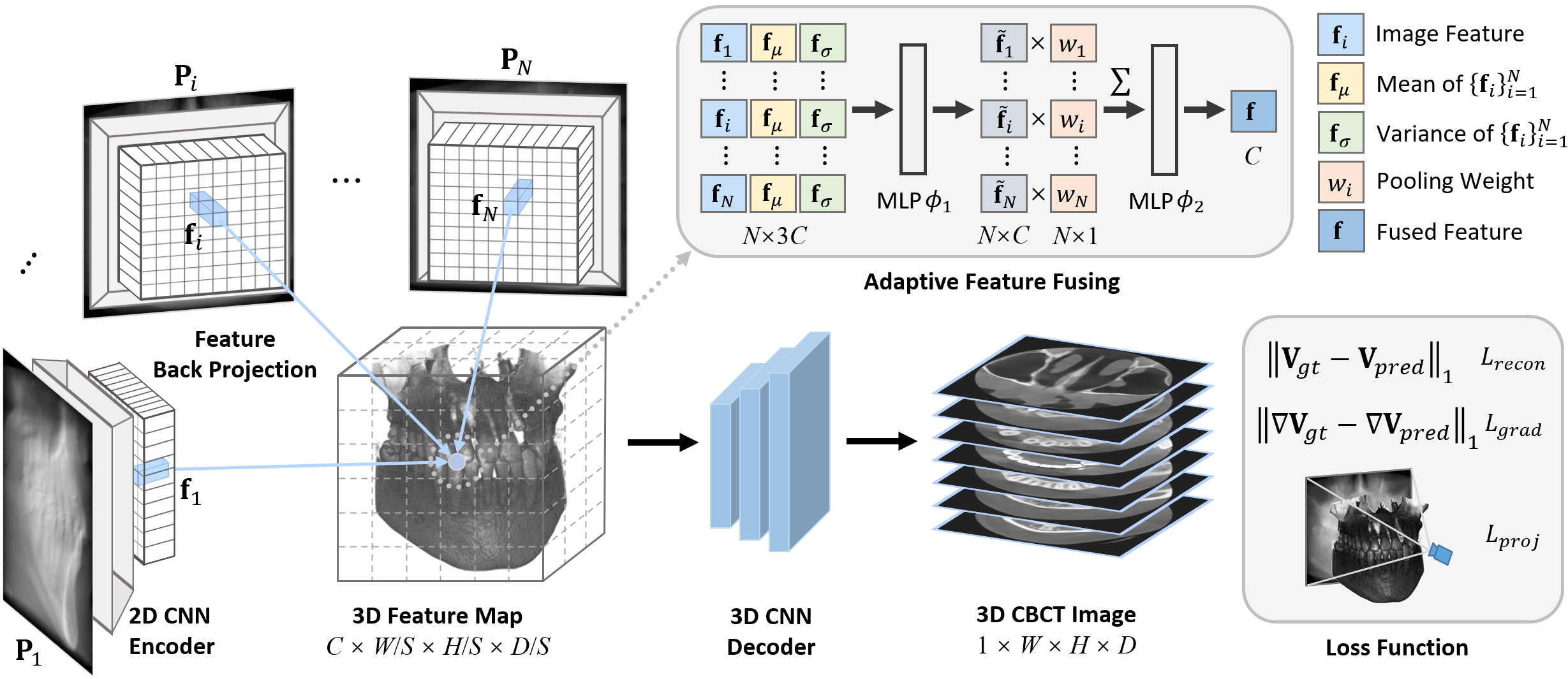

Geometry-Aware Attenuation Learning for Sparse-View CBCT Reconstruction

IEEE Transactions on Medical Imaging (IEEE TMI), 2024

Zhentao Liu, Yu Fang, Changjian Li, Han Wu, Yuan Liu, Dinggang Shen, Zhiming Cui+

[PDF] | [CODE]

Abstract: Cone Beam Computed Tomography (CBCT) is the most widely used imaging method in dentistry. As hundreds of X-ray projections are needed to reconstruct a high-quality CBCT image (i.e., the attenuation field) in traditional algorithms, sparse-view CBCT reconstruction has become a main focus to reduce radiation dose. Several attempts have been made to solve it while still suffering from insufficient data or poor generalization ability for novel patients. This paper proposes a novel attenuation field encoder-decoder framework by first encoding the volumetric feature from multi-view X-ray projections, then decoding it into the desired attenuation field. The key insight is when building the volumetric feature, we comply with the multi-view CBCT reconstruction nature and emphasize the view consistency property by geometry-aware spatial feature querying and adaptive feature fusing. Moreover, the prior knowledge information learned from data population guarantees our generalization ability when dealing with sparse view input. Comprehensive evaluations have demonstrated the superiority in terms of reconstruction quality, and the downstream application further validates the feasibility of our method in real-world clinics.

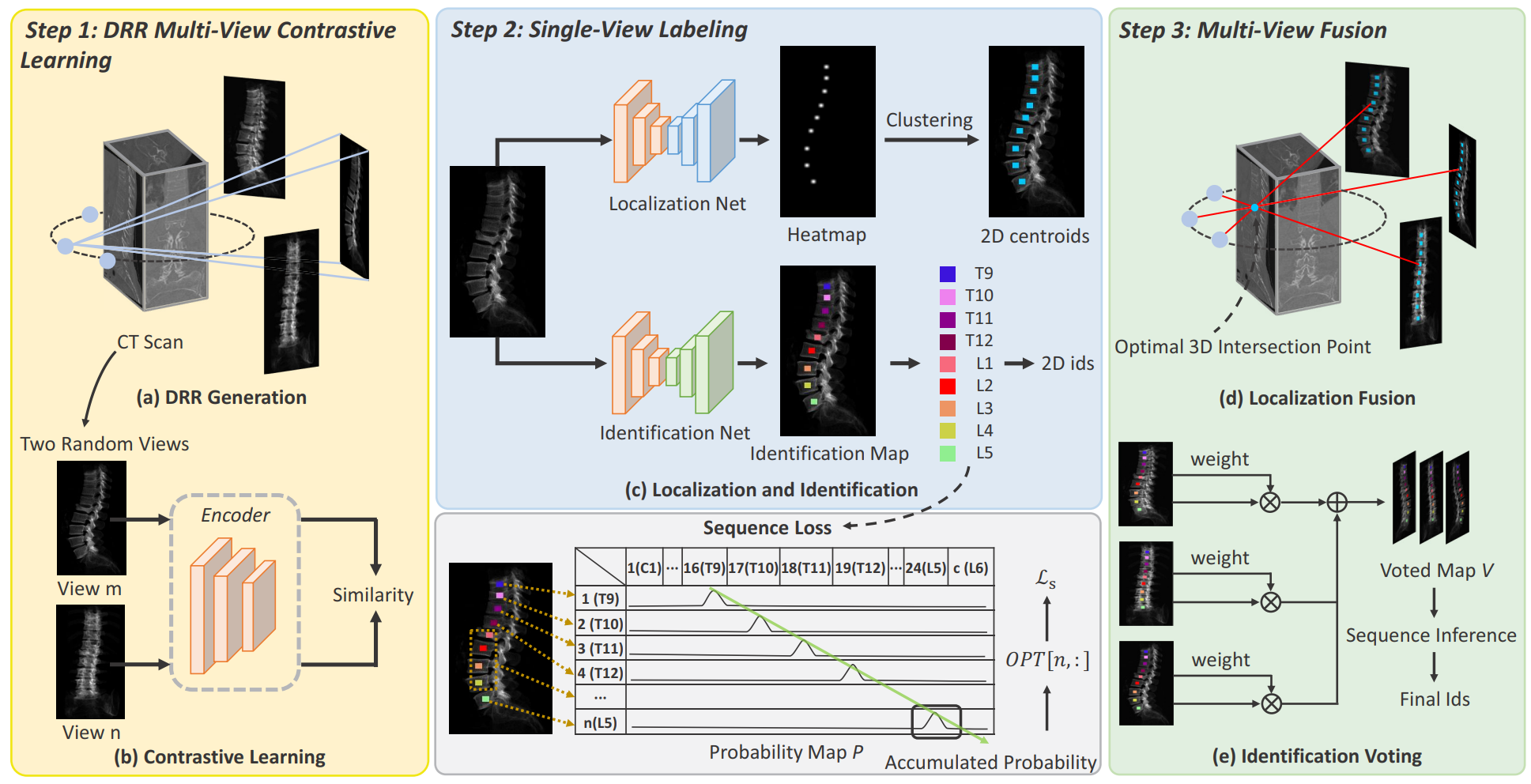

Multi-View Vertebra Localization and Identification from CT Images

MICCAI 2023

Han Wu, Jiadong Zhang, Yu Fang, Zhentao Liu, Nizhuan Wang, Zhiming Cui+, Dinggang Shen+

[PDF] | [CODE] | [SUPP] | [SLIDES] | [POSTER]

Abstract: Accurately localizing and identifying vertebrae from CT images is crucial for various clinical applications. However, most existing efforts are performed in 3D with a patch-cropping operation, suffering from large computation costs and limited global information. In this paper, we propose a multi-view vertebra localization and identification method for CT images, converting the 3D problem into a 2D localization and identification task on different views. Without the limitation of the 3D cropped patch, our method can learn the multi-view global information naturally. Moreover, to better capture the anatomical structure information from different view perspectives, a multi-view contrastive learning strategy is developed to pre-train the backbone. Additionally, we further propose a Sequence Loss to maintain the sequential structure embedded along the vertebrae. Evaluation results demonstrate that, with only two 2D networks, our method can localize and identify vertebrae in CT images accurately, and outperforms the state-of-the-art methods consistently ...

PREPRINT

- Two-Stage Robust 3D CTA–2D DSA Alignment via Vascular-aware Rigid and Pyramid-Based Hierarchical Non-rigid Registration

Xiaosong Xiong, Caiwen Jiang, Han Wu, Xiao Zhang, Peng Wu, Yanli Song, Xinyi Zhang, Dijia Wu, Dinggang Shen+

Medical Image Analysis (MedIA). (Under Review)

- COMET: Controllable Orthodontic Video Editing with Multi-view Estimation

Lanzhuju Mei, Yulong Dou, Han Wu, Guo Chen, Zhiming Cui, Dinggang Shen+

IEEE Transactions on Visualization and Computer Graphics (IEEE TVCG). (Under Review)

Awards

- Outstanding Student of ShanghaiTech (Top 10%), 2023.

- Outstanding Graduates of WUT, 2022.

- Outstanding Graduation Thesis of WUT (Top 4%), 2022.

- 2nd National Prize of National Undergraduate Engineering Practice and Innovation Competition, 2021.

- 3rd National Prize of The 10th "China Software Cup" College Student Software Design Competition, 2021.

- 1st Prize of Undergraduate Engineering Practice and Innovation Competition Hubei Prov., 2021.

- 3rd Prize of National University Student Intelligent Car Race, Hubei Prov., 2021.

- 3rd Prize of National Undergraduate Computer Design Competition, Hubei Prov., 2021.

- Ranked 27/169 in The 10th "China Software Cup" College Student Software Design Competition Online Tournament, 2021.

- 3rd Prize of The 6th "Internet+" Innovation and Entrepreneurship Competition, Hubei Prov., 2020.

- Ranked 3/218 in National University Student Intelligent Car Race Online Tournament: Crowd Counting, 2020.

- Ranked 15/332 in Baidu AIStudio Regular Season of Bugs Detection, 2020.

Services

Conference Reviewers

- International Conference on Machine Learning in Medical Imaging (MLMI) 2025

- International Conference on Machine Learning in Medical Imaging (MLMI) 2024

- International Conference on Machine Learning in Medical Imaging (MLMI) 2023

- International Conference on Machine Learning in Medical Imaging (MLMI) 2022

- International Conference on Pattern Recognition (ICPR) 2022

Journal Reviewers

Membership

Teaching Assistant

- BME2113 Algorithms Design And Analysis (Python) @ ShanghaiTech, 2023 Fall

Other Services

- Baidu PaddlePaddle Developer Expert (PPDE)

Invited Talks

Knowledge-driven Landmark Detection in Medical Images

Miscellaneous

- I'm a big fan of Taylor Swift, Jay Chou, and JJ Lin.

- I love playing guitar whenever I feel stressed. Masaaki Kishibe is my favourite guitarist.

- I enjoy playing LOL with my friends. I was also a Master player in LOLM.

Contact

wuhan2022 [at] shanghaitech.edu.cn

Address

393 Middle Huaxia Road, Pudong, Shanghai, China

Office

IDEA Lab @ BME Building, ShanghaiTech University, Shanghai, China